My Dream AI Client

After months of heavy use of different AI clients (ChatGPT, Claude Desktop, Raycast, Relevance, etc.), I keep running into limitations in a few key areas. Here are the three key product features I believe would make the dream AI client.

Feature 1: Universal Chat

ChatGPT and Claude have clearly proven the need and use case for this, so I won't dive in too deeply. However, the additional features I want are:

- The ability to select my preferred model

- The ability to load in as many MCP servers & tools as needed - given how fundamental they now are to daily use (I don't want to be restricted to 40, the official Atlassian one has 26 alone 😅)

- The ability to store my memories externally or reference them cross-client

- The ability to connect more knowledge sources such as Notion & Confluence (rather than traditional file storage), then easily search and reference this knowledge.

The last two could be solved with MCP servers or more native integrations with other platforms. In my opinion (and trying not to be biased), StackChat is one of the leaders in this space given its very strong remote MCP support and ability to select models from different AI labs.

The final feature I want is:

- The ability to tag a workflow like you do in messaging apps, e.g. @post-meeting-agent, to kick-start that workflow... more details on that below.

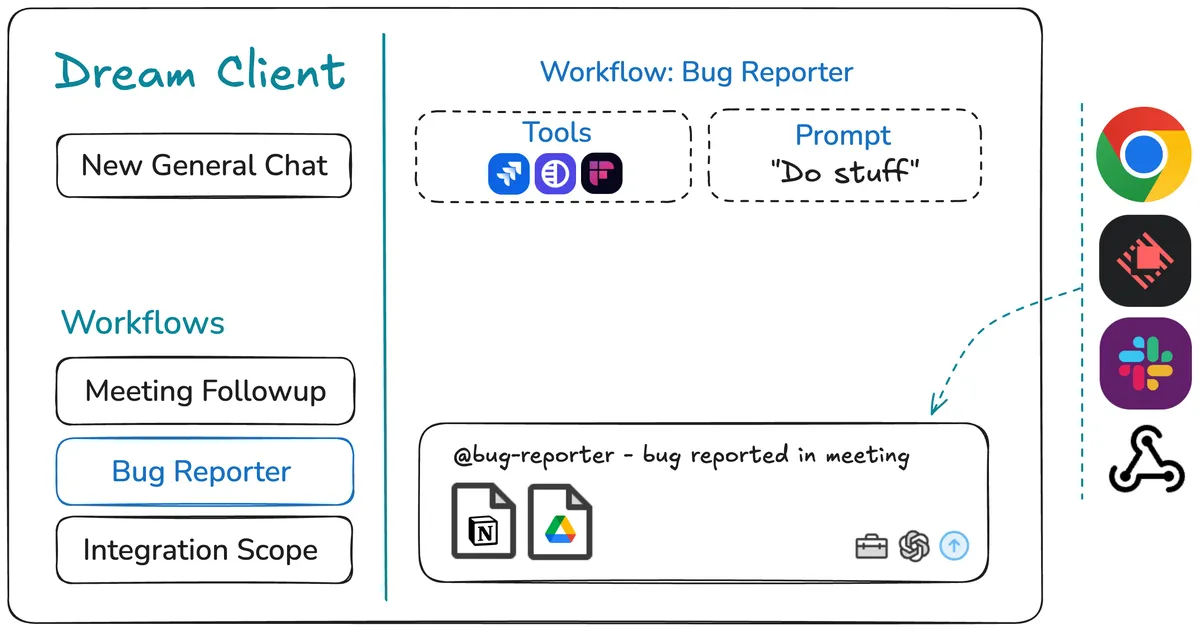

Feature 2: Workflows

A workflow (what some call "sub-agents") is a sequence of steps I regularly do: creating meeting followups from transcripts, raising bug tickets, updating documentation, etc. These often have a set scope and are pretty repeatable.

This is the feature that I believe is yet to be well executed. Many platforms excel at a few of the sub-features, but I haven't found one that masters them all. Here are the fundamental features that would make up workflows in an ideal LLM client:

Workflow Structure

- Workflows would be bucketed

  - Similar to how Claude and ChatGPT use projects

- Each workflow would have a system prompt guiding it on what to do

- Each workflow has a defined list of tools it can use (not servers; specific tools)

  - Ideally, these could be flagged as core tools or secondary tools

- Similar to tools, a workflow knows about common resources it can use

  - This could be specific knowledge documents, all child documents in a folder, key web pages, a codebase, etc.

- You can choose your model per workflow

  - You may want to choose a different model for something doing code vs generating content
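To make that structure concrete, here is a minimal sketch of what a workflow definition might look like as data. Everything in it is an assumption for illustration: the `Workflow` class, its field names, the tool identifiers, the resource URIs and the model id are all hypothetical, not the format of any existing client.

```python
from dataclasses import dataclass, field


@dataclass
class Workflow:
    name: str            # tag used to launch it from chat (hypothetical)
    bucket: str          # grouping, similar to a project
    system_prompt: str   # guides the workflow on what to do
    model: str           # chosen per workflow
    core_tools: list[str] = field(default_factory=list)
    secondary_tools: list[str] = field(default_factory=list)
    resources: list[str] = field(default_factory=list)

    def allowed_tools(self) -> list[str]:
        """Core tools first, then secondary tools, without duplicates."""
        return list(dict.fromkeys(self.core_tools + self.secondary_tools))


# Hypothetical example: tool names and resource URIs are made up.
post_meeting = Workflow(
    name="post-meeting-agent",
    bucket="meetings",
    system_prompt="Turn a meeting transcript into follow-up actions.",
    model="example-model-id",
    core_tools=["jira.create_issue", "slack.post_message"],
    secondary_tools=["confluence.search", "jira.create_issue"],
    resources=["notion://team-handbook", "https://example.com/meeting-template"],
)

print(post_meeting.allowed_tools())
```

Listing individual tools rather than whole servers keeps the model's tool list small, and the core/secondary split gives the client a natural order in which to offer them.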

Using Workflows

- A workflow can be triggered to run on a schedule, via a webhook, or launched via a chat (by simply tagging the workflow in the Universal Chat)

- If a workflow is given feedback at the end of a run, it can update its system prompt or store an example of what a good run (output for a given input) looks like
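The chat trigger and the feedback loop could be sketched roughly as follows. This is only an illustration under my own assumptions: the tag syntax (`@` followed by lowercase letters, digits and hyphens), the `WorkflowState` class and both function names are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Assumed tag syntax: "@" not preceded by a word character (so emails are skipped).
TAG_PATTERN = re.compile(r"(?<!\w)@([a-z0-9][a-z0-9-]*)")


@dataclass
class WorkflowState:
    system_prompt: str
    good_runs: list[tuple[str, str]] = field(default_factory=list)  # (input, output)


def tagged_workflows(message: str, known: set[str]) -> list[str]:
    """Return the known workflow names tagged in a chat message, in order."""
    return [name for name in TAG_PATTERN.findall(message) if name in known]


def record_feedback(state: WorkflowState, run_input: str, run_output: str,
                    approved: bool, note: str = "") -> None:
    """Store an approved run as an example, or fold a correction into the prompt."""
    if approved:
        state.good_runs.append((run_input, run_output))
    elif note:
        state.system_prompt += f"\nFeedback from a previous run: {note}"


known = {"post-meeting-agent"}
print(tagged_workflows("@post-meeting-agent here is today's transcript", known))

state = WorkflowState(system_prompt="Write meeting follow-ups.")
record_feedback(state, "transcript", "follow-up email", approved=True)
record_feedback(state, "transcript", "long email", approved=False, note="Keep it under 100 words.")
```

A schedule or webhook trigger would call the same workflow entry point; only the source of the input differs.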

In terms of workflows, I feel Relevance AI gets pretty close. However, it's understandably too focused on native tools rather than remote tools, and this lack of tool portability is a deal breaker for me (Relay.app might provide a well-balanced alternative in this space).

Feature 3: Initiation Points

While this doesn't seem like much of a feature, I believe it's key to having the most frictionless UX, and therefore is a fundamental feature.

To achieve the best UX, I believe this would need to run as a desktop app. However, it would also be great to initiate the universal chat from:

- Web browser - taking the relevant content or simply the URL for context

- Raycast

- Tagging in a Slack thread

Similarly, it would be great to be able to pool a few resources from different systems together and have them run in the same workflow, e.g. forward a Slack message into the prompt, then add a Jira ticket I'm looking at in Chrome.
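One way to picture this pooling is a small list of context items that each initiation point appends to before the workflow runs. The sketch below is purely illustrative: `ContextItem`, `build_prompt`, the source labels and the example references are all assumptions of mine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    source: str  # where it came from, e.g. "slack" or "browser" (hypothetical labels)
    ref: str     # a link or identifier for the item
    text: str    # the content handed to the model


def build_prompt(instruction: str, items: list[ContextItem]) -> str:
    """Combine an instruction with pooled items, skipping repeated (source, ref) pairs."""
    seen: set[tuple[str, str]] = set()
    parts = [instruction]
    for item in items:
        key = (item.source, item.ref)
        if key in seen:
            continue
        seen.add(key)
        parts.append(f"[{item.source}] {item.ref}\n{item.text}")
    return "\n\n".join(parts)


# Made-up example data for illustration only.
pooled = [
    ContextItem("slack", "thread-123", "Login fails after the latest deploy."),
    ContextItem("browser", "https://example.com/browse/ABC-1", "Ticket: login page returns an error"),
    ContextItem("slack", "thread-123", "Login fails after the latest deploy."),
]
prompt = build_prompt("Raise a bug ticket from this context.", pooled)
print(prompt)
```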

I appreciate there is an argument that workflows should be done in a specialised app, but I feel this should all be achievable in a single app. Currently, I'm using Claude for this, but would love to find a tool that offers all of these features!

Related Post: Rethinking MCP: From App to Workflow Servers