Rethinking MCP: From App to Workflow Servers

Why: Users don't care about servers; they care about actions and outcomes

After spending months building and using MCP servers for daily tasks, I've hit a wall that I suspect many others will too. Not a technical wall, but an architectural one that fundamentally affects the user experience. It has me asking: what's the best approach for building and interacting with MCP tools?

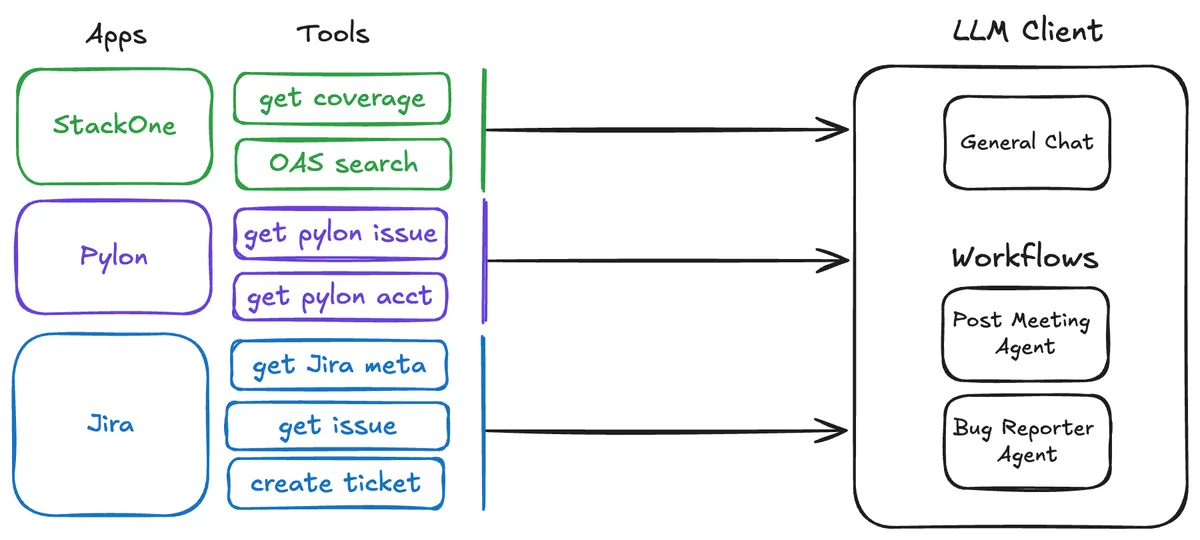

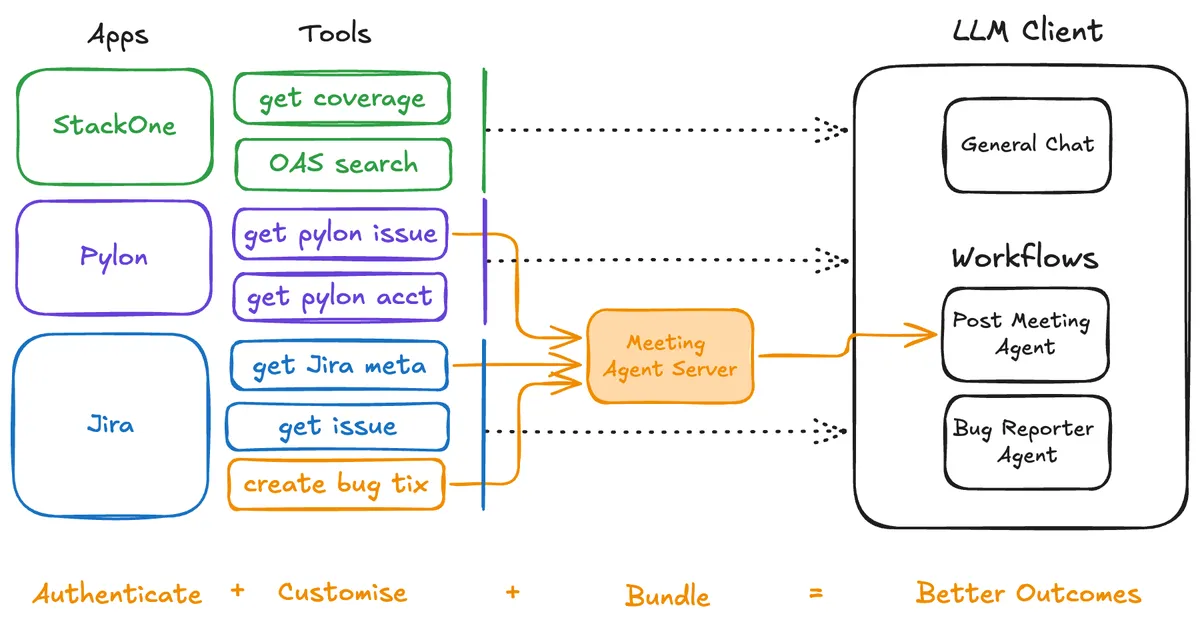

The solution I explore is decoupling tools from traditional app-based servers, then letting users bundle tools from multiple apps into workflow-specific servers.

the problem

Every discrete workflow I work on (a sequence of steps I do regularly) involves more than one platform. Because I use multiple platforms daily and have many workflows, I end up needing multiple servers. Each server typically has read and write operations for a few different entities, which means heaps of tools. This causes a few pain points...

tool limits

Some platforms have hard limits on the number of tools that can be enabled at any given time. Claude has a hard limit of 40, so you need to be really selective about which ones you enable. This is inconvenient, as you need to toggle multiple tools on and off for each run. It becomes dangerous when some tools are dependencies of others, e.g. you need to get the meta details from Jira before you create a ticket. Unfortunately neither the user nor the LLM knows about these dependencies, so a disabled prerequisite yields worse results, potentially without the user ever noticing.
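To make the dependency problem concrete, here is a minimal sketch of the kind of check a client could run before a workflow starts. Everything in it is an assumption for illustration: the `Tool` type, the `requires` field, the tool names, and the `check_enabled` helper are hypothetical and not part of MCP or any client.

```python
from dataclasses import dataclass

# Limit taken from the figure quoted above; other clients may differ.
MAX_ENABLED_TOOLS = 40


@dataclass(frozen=True)
class Tool:
    """Hypothetical tool record; `requires` names tools that must also be enabled."""
    name: str
    requires: frozenset = frozenset()


def check_enabled(catalogue, enabled, limit=MAX_ENABLED_TOOLS):
    """Return a list of human-readable problems with the current selection."""
    problems = []
    if len(enabled) > limit:
        problems.append(f"{len(enabled)} tools enabled, limit is {limit}")
    for name in sorted(enabled):
        # Any prerequisite that is not enabled is a silent failure waiting to happen.
        for dep in sorted(catalogue[name].requires - enabled):
            problems.append(f"{name} needs {dep}, which is disabled")
    return problems


# Illustrative tool names, not the real Atlassian connector's.
catalogue = {
    "jira_get_create_meta": Tool("jira_get_create_meta"),
    "jira_create_ticket": Tool(
        "jira_create_ticket", frozenset({"jira_get_create_meta"})
    ),
}

print(check_enabled(catalogue, {"jira_create_ticket"}))
# ['jira_create_ticket needs jira_get_create_meta, which is disabled']
```

Surfacing this as a warning would at least make the missing prerequisite visible to the user instead of letting the run quietly degrade.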

performance degradation

When an LLM has access to a heap of tools, it performs worse. While I don't have a way to quantify it, you can pretty easily see LLMs struggling after a few workflow runs. This can look like a few things:

- It goes and performs web searches unnecessarily when it should have all the context

- You mention something in the prompt (e.g. "ticket") and it doesn't know whether you're referring to an engineering ticket in Jira or a customer support ticket in Pylon

For a given workflow, I like having a tight scope on both the tools and the prompt, so the LLM only calls tools that actually help, and does so efficiently.

tool customisation

Unless they're technically capable, users can't really customise their own tools. For some Jira ticket creation workflows, I want to create a bug ticket on the engineering board; for others, I want to raise integration tickets. With the standard Atlassian connector, both go through the same Create Jira ticket tool, and using this generic tool increases the chance of creating a ticket incorrectly. It would be great to be able to customise these tools slightly for each workflow so they are tailored to my use case and the outcome is more consistent.

"users don't care about servers"

In the same way that we don't care about the infrastructure behind most things, only about its utility, users care about the outcome and the tools needed for their workflow, not servers. A server is just infrastructure: a means to get your hands on the tools that actually solve your problems.

new approaches for the future

For a given workflow, we need to give an agent (me or AI) access to the right tools to work efficiently. There are two extreme directions you could go here:

- Open - Give models all the tools and all the context, and control each workflow with the prompt.

- Scoped - Scope the agent to a workflow with a narrow toolset and a very specific prompt.

I think there's a place for both approaches, but for regular workflows, I want to be confident I'll receive a consistent outcome, and I'm yet to be convinced that the open approach can lead to consistently good outcomes for specific tasks.

option 1: a better LLM client

Ideally this is a problem that can be solved within the LLM clients themselves. I wrote a post on what my dream LLM client would look like, which would significantly improve how users interact with external tools via MCP.

option 2: workflow or role based tool servers

The solution I'm interested in is decoupling tools from traditional app-based servers, then letting users bundle tools from multiple apps into workflow-specific (or role-specific) servers. Users could then select only the tools, from whichever platforms, that relate to what they need to do for their job.

Our sales team may want to read Pylon and Jira tickets, but they don't need to create Jira tickets, so they only need a small number of tools from the Jira connector.

With this approach, users get access to the tools they need for their job, which helps prevent tool bloat and keeps them under any tool limits. Also, when you ask an LLM to "create a ticket" and it can only create tickets in one system, there is no ambiguity to resolve, so it should perform better. This becomes extremely beneficial when we add these servers to a workflow...

Authentication

Fundamentally, authentication to an app needs to happen at the app level. The one improvement that could easily happen here is controlling which tools can be listed at the app level. E.g. when I authenticate Todoist, I select the tools I will use (say, tasks but not projects), and my servers then only have access to task-related tools.
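As a rough sketch of that app-level control, the selection made at authentication time could act as a filter on what the app is allowed to list. The `AppTool` type, the entity tags, and the Todoist tool names below are assumptions for illustration, not the real connector's tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppTool:
    """Hypothetical tool record tagged with the entity it operates on."""
    app: str
    entity: str
    name: str


# Illustrative catalogue; the real Todoist tool names may differ.
TODOIST_TOOLS = [
    AppTool("todoist", "tasks", "list_tasks"),
    AppTool("todoist", "tasks", "create_task"),
    AppTool("todoist", "projects", "list_projects"),
    AppTool("todoist", "projects", "create_project"),
]


def listable_tools(tools, enabled_entities):
    """Return only the tool names whose entity was selected at auth time."""
    return [tool.name for tool in tools if tool.entity in enabled_entities]


# The user ticked "tasks" but not "projects" when connecting the app.
print(listable_tools(TODOIST_TOOLS, {"tasks"}))
# ['list_tasks', 'create_task']
```

Any server built on top of this connection would then only ever see the filtered list.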

Customisation

For some workflows, you may want to make a tool more efficient by effectively "hard coding" part of its input or result based on the workflow you're doing. Most tools are understandably pretty generic, so it would be great to be able to tailor existing tools to be more suitable for your use case. Given this bundling would need to occur in another system (i.e. an MCP Registry), it would be great to be able to vibe code any customisations.
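One simple way to picture this kind of customisation is pre-filling a generic tool's arguments. The sketch below uses Python's `functools.partial`; the `create_jira_ticket` function is a stand-in that only builds a payload, and its parameters and the `ENG`/`INT` project keys are assumptions, not the Atlassian connector's actual schema.

```python
from functools import partial


def create_jira_ticket(project, issue_type, summary, description=""):
    """Stand-in for a generic 'create ticket' tool; it only builds the payload."""
    return {
        "project": project,
        "issue_type": issue_type,
        "summary": summary,
        "description": description,
    }


# Workflow-specific variants with the workflow's fixed choices baked in.
# Because the fixed values are bound by keyword, callers must also pass
# the remaining arguments by keyword.
create_engineering_bug = partial(create_jira_ticket, project="ENG", issue_type="Bug")
create_integration_ticket = partial(create_jira_ticket, project="INT", issue_type="Task")

ticket = create_engineering_bug(summary="Login button unresponsive")
print(ticket["project"], ticket["issue_type"])  # ENG Bug
```

The LLM then only has to supply the parts that genuinely vary per run, which leaves fewer ways to file the ticket on the wrong board.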

Bundle

Now that the tools have been decoupled, we can construct our own workflow-specific server from just the tools we need. This keeps an LLM focused and scoped: it has tools from multiple systems, but only the ones it needs.
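A bundle like this could be sketched as a selection over a per-app catalogue. The `ToolRef` and `WorkflowServer` types, the `bundle` helper, and the tool names are all hypothetical; a real registry would also need to handle auth and tool schemas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolRef:
    app: str
    name: str


@dataclass
class WorkflowServer:
    """Hypothetical workflow-specific server: a named set of cross-app tools."""
    name: str
    tools: list

    def list_tools(self):
        # Prefix with the app so same-named tools from different apps stay distinct.
        return [f"{tool.app}.{tool.name}" for tool in self.tools]


# Illustrative catalogue of what each app-based server exposes.
CATALOGUE = {
    "jira": {"get_ticket", "create_ticket", "get_create_meta"},
    "pylon": {"get_ticket", "list_tickets"},
}


def bundle(name, selection):
    """Build a workflow server from {app: [tool names]}, rejecting unknown tools."""
    tools = []
    for app, names in selection.items():
        for tool_name in names:
            if tool_name not in CATALOGUE.get(app, set()):
                raise KeyError(f"{app} has no tool named {tool_name}")
            tools.append(ToolRef(app, tool_name))
    return WorkflowServer(name, tools)


# Read-only ticket access for the sales team, drawn from two apps.
sales = bundle(
    "sales-support",
    {"jira": ["get_ticket"], "pylon": ["get_ticket", "list_tickets"]},
)
print(sales.list_tools())
# ['jira.get_ticket', 'pylon.get_ticket', 'pylon.list_tickets']
```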

Another interesting area to consider is the Prompt aspect of the Model Context Protocol. Now that we have scoped our collection of tools to be very specific to a workflow, we could create and bundle a system prompt with the tools, so that all workflows run consistently across clients & all users get any updates to prompts.

Portability

At StackOne we're all regularly exploring many different platforms. I'm using Raycast and Claude at the moment and starting to explore Relay.app, while others are using ChatGPT, Retool, Relevance.ai, Cursor & Claude Code. Having these workflow-specific tools and prompts bundled and configured remotely makes the workflows portable: you can set them up on different platforms and expect similar results.
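One way to imagine the bundled prompt and the remote configuration together is a single versioned manifest that any client could fetch. This is only a sketch under assumptions: the `WorkflowManifest` type, its fields, and the JSON shape are invented for illustration and are not defined by MCP.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class WorkflowManifest:
    """Hypothetical portable description of a workflow: prompt plus tool list."""
    name: str
    version: int
    system_prompt: str
    tools: list

    def to_json(self):
        # sort_keys gives a stable document, which makes diffs between versions readable.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw):
        return cls(**json.loads(raw))


manifest = WorkflowManifest(
    name="raise-engineering-bug",
    version=3,
    system_prompt="Create bug tickets on the engineering board only.",
    tools=["jira.get_create_meta", "jira.create_ticket", "pylon.get_ticket"],
)

# A different client loads the same document and gets an identical workflow.
restored = WorkflowManifest.from_json(manifest.to_json())
print(restored == manifest)  # True
```

Bumping `version` when the prompt changes would give every client a simple signal that it should refresh its copy.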

option 3: better tool calling

Another option is for models to simply get better at knowing which tools to call for a given task. In my opinion, this can only help. It will become essential as the number of apps and tools increases and our workflows become more varied.

One key thing would be having a good feedback mechanism to ensure the model is learning the nuance of when to call which tool.

is this the future?

I could be overthinking this, as the current server-per-app approach may scale just fine. However, having already experienced these pain points as a user, I think there's plenty of room to make this space more outcome oriented rather than optimising for simple, scalable infrastructure.

The beauty of the workflow-based approach is that it mirrors how people actually work. You don't think in terms of platforms; you think in terms of getting stuff done.

In the future, I think we will see all three options working together in parallel and the results from that will be pretty impressive.